Graphics dev here; some notes on performance targeting that people might find useful.

The Series S has been designed to match the Series X's graphical output in almost every way except output resolution. It still supports all the same GPU features; it just targets a lower resolution and has lower memory bandwidth because it doesn't need more.

You can take a game running at 4K on the Series X and, without any changes, render it at 1440p on a Series S and get performance in the same ballpark.

People here are correct that it's not quite as simple as "you have 33% of the FLOPS, therefore you can render 33% of the resolution." But because most games are heavily fill-rate limited, it is closer to that than it used to be.
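To put rough numbers on the "not quite that simple" point: 1440p is about 44% of the pixels of native 4K, so if the Series S has roughly a third of the compute (the 33% figure above), each pixel gets about three quarters of the compute budget it would get on Series X. A quick sketch of that arithmetic, assuming GPU cost scales purely with pixel count (the fill-rate-limited simplification, not a real profile):

```python
# Rough per-pixel budget comparison, assuming GPU cost scales linearly
# with pixel count (the fill-rate-limited simplification).

def pixels(width: int, height: int) -> int:
    return width * height

UHD_4K = pixels(3840, 2160)     # native 4K
QHD_1440P = pixels(2560, 1440)  # 1440p

pixel_ratio = QHD_1440P / UHD_4K  # fraction of 4K's pixels rendered at 1440p
compute_ratio = 1 / 3             # the "33% of the FLOPS" figure from the post

# Compute available per pixel on the smaller GPU, relative to the bigger one.
per_pixel_budget = compute_ratio / pixel_ratio

print(f"1440p is {pixel_ratio:.1%} of 4K's pixels")
print(f"per-pixel compute budget: {per_pixel_budget:.0%} of Series X")
```

So the gap per pixel is real but modest, which fits the "same ballpark" claim; work that doesn't scale with resolution won't shrink this way.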

They are also correct that VRAM amount and bandwidth matter, and both are reduced on the Series S; however, so are the texture sizes.

Assets for the Series X package will be at the optimum resolution for native 4K output; assets for the Series S will be at the optimum resolution for 1440p output, which is almost half the resolution (about 44% of the pixels)!
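For a sense of how quickly texture memory falls with resolution: texel count goes with the square of a texture's dimensions, so a texture that is half as wide and half as tall (i.e. with its top mip level dropped, one common way to produce a lower-resolution variant) needs about a quarter of the memory. This sketch assumes uncompressed RGBA8 (4 bytes per texel) and a full mip chain; shipped games mostly use block-compressed formats, which shrink the absolute numbers but give roughly the same ratio. Whether a given Series S build drops a whole mip or scales less aggressively is an assumption here, not something stated above.

```python
# Memory for a 2D texture with a full mip chain, assuming a fixed
# bytes-per-texel format (e.g. uncompressed RGBA8 = 4 bytes).

def mip_chain_bytes(width: int, height: int, bytes_per_texel: int) -> int:
    total = 0
    while True:
        total += width * height * bytes_per_texel
        if width == 1 and height == 1:
            break
        # Each mip level halves each dimension, clamped at 1.
        width = max(1, width // 2)
        height = max(1, height // 2)
    return total

full = mip_chain_bytes(4096, 4096, 4)     # top mip sized for 4K output
reduced = mip_chain_bytes(2048, 2048, 4)  # same texture with the top mip dropped

print(f"4096^2: {full / 2**20:.1f} MiB")
print(f"2048^2: {reduced / 2**20:.1f} MiB ({reduced / full:.0%} of the original)")
```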

This has four major effects:

- Lower memory usage at runtime, which is necessary because the Series S has less memory.

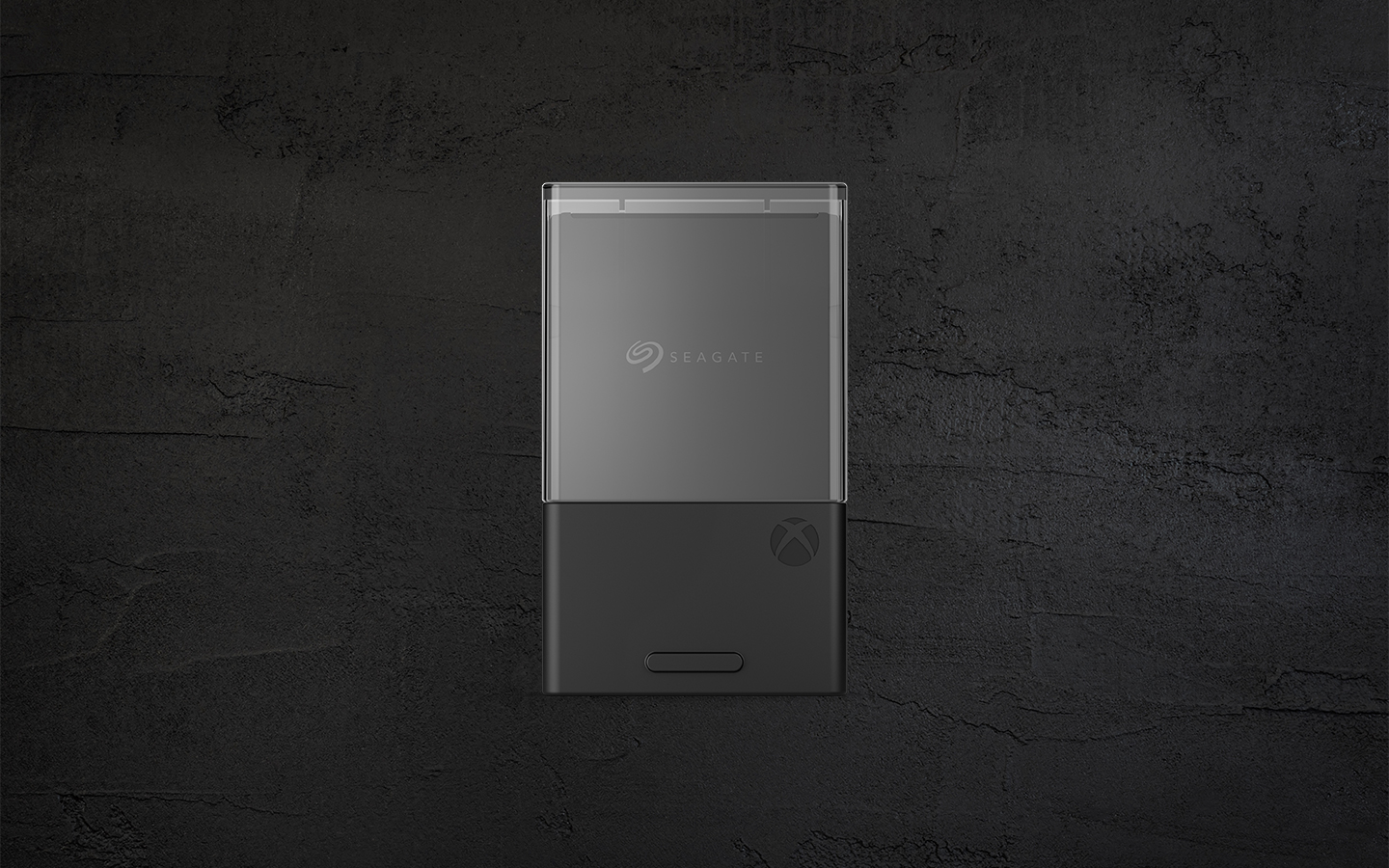

- Less disk space consumed per game (potentially as much as 40% less), which is great because the Series S has half the storage.

- Lower SSD bandwidth required, which is good because the Series S SSD is also slower: it uses fewer channels to keep the price down.

- Lower memory bandwidth required, both for moving textures and for simple fill operations.
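The fill-bandwidth point scales directly with pixel count. A back-of-the-envelope sketch, assuming one full-screen write of a 4-byte-per-pixel render target (e.g. RGBA8) per pass at 60 fps; real frames run many passes with mixed formats, so treat these as per-pass figures rather than a frame total:

```python
# Back-of-the-envelope framebuffer write bandwidth for full-screen passes.
# Assumes a fixed bytes-per-pixel target and ignores compression, overdraw and reads.

def fill_bytes_per_second(width: int, height: int, bytes_per_pixel: int,
                          passes: int, fps: int) -> int:
    return width * height * bytes_per_pixel * passes * fps

for name, (w, h) in {"4K": (3840, 2160), "1440p": (2560, 1440)}.items():
    bw = fill_bytes_per_second(w, h, bytes_per_pixel=4, passes=1, fps=60)
    print(f"{name}: {bw / 1e9:.2f} GB/s per full-screen pass at 60 fps")
```

Every extra pass multiplies both figures, but the 1440p number stays at about 44% of the 4K one, which is the kind of saving that lets a lower-bandwidth memory setup keep up.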

So by scaling back the RAM size and speed, the SSD size and speed, and the GPU speed, you end up with a console that can handle 1440p content perfectly well, as long as the content is mastered for a 1440p experience. This isn't as hard as you might think, because most content processing pipelines already generate the final output sizes for each build automatically.
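As a rough sketch of what that automatic step might look like: a pipeline could pick, per target platform, how many top mip levels to strip from each texture so its largest level roughly matches the target output resolution. Everything here is hypothetical (the `TARGET_OUTPUT_HEIGHT` table, the `mips_to_drop` and `packaged_size` helpers, and the rounding rule); real engines expose this through their own per-platform texture settings.

```python
import math

# Hypothetical per-platform output heights used by the build step.
TARGET_OUTPUT_HEIGHT = {
    "series_x": 2160,  # native 4K
    "series_s": 1440,
}

REFERENCE_HEIGHT = 2160  # assumption: source assets are authored for 4K output


def mips_to_drop(platform: str) -> int:
    """Number of top mip levels to strip for a platform (hypothetical rule).

    Each dropped mip halves the texture's width and height, so the ideal
    (fractional) count is log2(reference / target); round it to the
    nearest whole level and never go below zero.
    """
    ratio = REFERENCE_HEIGHT / TARGET_OUTPUT_HEIGHT[platform]
    return max(0, round(math.log2(ratio)))


def packaged_size(source_size: int, platform: str) -> int:
    """Largest mip level shipped for a square power-of-two source texture."""
    return max(1, source_size >> mips_to_drop(platform))


for platform in TARGET_OUTPUT_HEIGHT:
    print(platform, mips_to_drop(platform), packaged_size(4096, platform))
```

With this rounding rule the Series S package lands one mip level down (2048 instead of 4096 for a 4096 source), which is a bigger cut than the 1440p pixel ratio alone suggests; a real pipeline might use a different threshold or per-texture overrides.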